
On 22 December 2015 at 23:49, Anubhav Jain [email protected] wrote:

Hi Felipe,

If you used different variable names for update_spec, they should not have overwritten one another.

Do you know if the code works OK when it is not in offline mode?

I have just written the following small test script and it runs without problems:

https://github.com/materialsproject/fireworks/blob/master/fireworks/examples/custom_firetasks/merge_task/merge_task.py

But I did not try offline mode.

Best,

Anubhav

–

On Tue, Dec 22, 2015 at 2:21 PM, felipe zapata [email protected] wrote:

Hi Anubhav,

I’m probably doing something wrong, but in my program I am using unique identifiers to label the parameters that are passed to the children, something like

return FWAction(update_spec={"var_<some_unique_id>": my_dependency})

These unique identifiers label the arguments that are passed from parents to children, but it does not work because some dependencies are missing. It seems that only the last computed parent process communicates its dependencies to the children, while the other parents do not pass any message at all. I am surprised, because I thought that FireWorks merges the dependencies before submitting the child processes.

But anyway, passing the arguments using mod_spec is the way to go.

Thank you for the prompt answer.

Best,

Felipe

On 22 December 2015 at 18:54, Anubhav Jain [email protected] wrote:

Hi Felipe,

I think what you are describing is that all parents call update_spec on the same key. For example, you have 6 parents that all return something like this:

return FWAction(update_spec={"param1": my_dependency})

In this case, the “param1” variable for the child will indeed be overwritten with the my_dependency value of the last parent. This is because update_spec performs a dict update (which overwrites the previous value of “param1”).
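A minimal sketch of that overwrite in plain Python (no FireWorks import involved). The parent results and their ordering are hypothetical values chosen for illustration:

```python
# Plain-Python illustration of dict-update semantics; not FireWorks code.
child_spec = {}

# Hypothetical updates returned by three parents, in completion order.
parent_updates = [
    {"param1": "result_from_mul1"},
    {"param1": "result_from_mul2"},
    {"param1": "result_from_mul3"},
]

for update in parent_updates:
    # Every parent writes to the same key, so each update replaces
    # the value left by the previous parent.
    child_spec.update(update)

print(child_spec)
```

Only the value written by the parent that finished last is left under "param1" once the loop ends.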

I think that what you probably want is to use the mod_spec variable:

return FWAction(mod_spec=[{'_push': {'param1': my_dependency}}])

Then, each parent will push its dependency value onto an array, i.e., param1 in the child will be an array containing all dependency values. You can look at the class DictMods in the FWS source to see all the possible commands like _push (e.g. _set, _unset, _inc, etc).
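The accumulating behaviour can be imitated in plain Python. This is only a sketch of the idea, not the DictMods implementation; the helper name push_onto_spec and the parent results are hypothetical:

```python
# Plain-Python imitation of "push onto an array"; not the FireWorks source.
def push_onto_spec(spec, key, value):
    """Append value to the list stored under key, creating the list if absent."""
    spec.setdefault(key, []).append(value)
    return spec


child_spec = {}

# Hypothetical results from three parents, in completion order.
for result in ["result_from_mul1", "result_from_mul2", "result_from_mul3"]:
    push_onto_spec(child_spec, "param1", result)

print(child_spec["param1"])
```

Because every parent appends instead of assigning, the child ends up with one list that holds a value from each parent, in the order the parents completed.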

If you are looking for a simple runnable example, you might try this tutorial ("A workflow that passes data"):

http://pythonhosted.org/FireWorks/dynamic_wf_tutorial.html

There are other ways to do it, e.g., by using different keys (not “param1”) for the different parents.
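A sketch of the different-keys approach, again in plain Python. The "var_" prefix, the parent names, and the numeric results are assumptions made for illustration:

```python
# Plain-Python illustration; each parent writes under its own key.
child_spec = {}

# Hypothetical parent names and results.
parent_results = {"mul1": 6, "mul2": 20, "mul3": 42}

for parent_name, result in parent_results.items():
    # Distinct keys, so a dict update never replaces another parent's value.
    child_spec.update({f"var_{parent_name}": result})

# The child can then collect every dependency by its key prefix.
dependencies = {
    key: value for key, value in child_spec.items() if key.startswith("var_")
}
print(dependencies)
```

With this layout the child needs a convention (here the key prefix) to find all of its inputs, whereas the push approach hands it a single list.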

Best,

Anubhav

Felipe Zapata

Postdoctoral research fellow

Vrije Universiteit Amsterdam

Division of Theoretical Chemistry

De Boelelaan 1083

NL-1081 HV Amsterdam

–

On Tue, Dec 22, 2015 at 9:11 AM, felipe zapata [email protected] wrote:

Dear All,

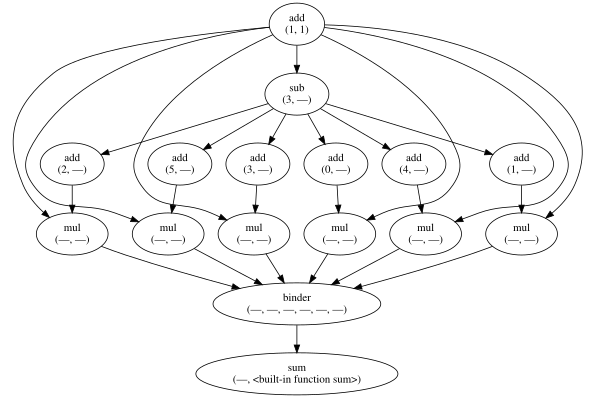

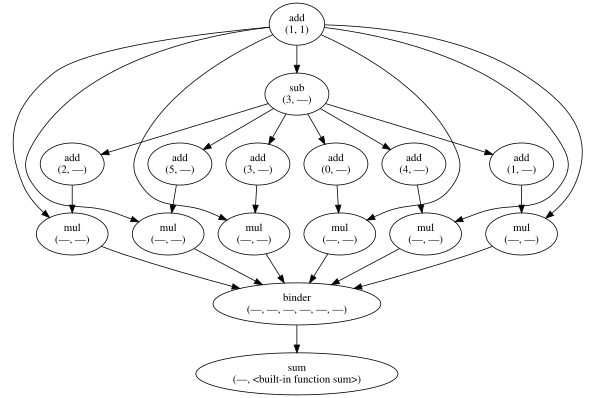

Let us suppose that I have a workflow like the one depicted in the image. For the sake of simplicity the nodes represent some simple computations, but they can be complex calculations.

Every single node of the graph is computed in a queue system (Slurm in this case). Also, the computations are performed in offline mode. Everything works well if a node has only one parent, but when a node has multiple parents things do not work as expected. For example, in the graph shown there is a node called binder; this binder function has several explicit dependencies on its parent nodes. So, the FireWorks workflow has a set of connections like

connections = {id_mul1: [id_binder], id_mul2: [id_binder], …and so on…}

Workflow(task, links_dict=connections)
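To make the shape of such a links dictionary concrete, here is a small plain-Python sketch. The integer ids and the choice of three parents are hypothetical; the real workflow may have more parents:

```python
from collections import defaultdict

# Hypothetical integer ids standing in for the real Firework ids.
id_mul1, id_mul2, id_mul3, id_binder = 1, 2, 3, 4

# Parent id -> list of child ids, the same shape as the connections dict.
connections = {
    id_mul1: [id_binder],
    id_mul2: [id_binder],
    id_mul3: [id_binder],
}

# Invert the mapping to see which parents feed each child.
parents_of = defaultdict(list)
for parent, children in connections.items():
    for child in children:
        parents_of[child].append(parent)

print(parents_of[id_binder])
```

Inverting the mapping is a quick way to check that binder really is linked to every parent it expects data from.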

The problem that I’m having is that I pass arguments from parents to children using update_spec, but apparently binder is called with the dependencies of only one parent, the last parent to be executed. So, when binder is executed it complains that it cannot find the other dependencies.

How can I pass all the parents’ dependencies to the child processes?

Best,

Felipe

